We recently acquired an Nvidia GTX Titan graphics processing unit (GPU) for statistical computing at work, specifically for double-precision floating point operations through the CUDA API. Before I lock it away in the server room, I would like to see how it compares to my primary GPU at home, an Nvidia GTX 680, and to my older GPUs, a pair of AMD Radeon HD 5770s. This act of comparison is called benchmarking: running a number of standard tests and trials in order to assess the relative performance of a piece of hardware or software.

A GPU (also called a graphics card or video card) is preferred over a central processing unit (CPU) for certain mathematical and scientific applications because of its ability to do highly parallel stream processing. This feature evolved in GPUs as a way to perform thousands of graphics rendering tasks, such as shading polygons, very quickly by executing a single instruction on multiple data simultaneously. Exploiting that power for other computing tasks is called general-purpose computing on graphics processing units (GPGPU).
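The "single instruction, multiple data" idea can be sketched in a few lines. Below, a hypothetical `daxpy_kernel` plays the role of a GPU kernel computing the double-precision operation `y = a*x + y`: every simulated thread runs the same code but uses its own index to pick which element to update. On a real GPU those threads run concurrently across the stream processors; this Python version loops over the thread indices one at a time, so it illustrates the programming model only, not the speed.

```python
from typing import List

def daxpy_kernel(thread_id: int, a: float, x: List[float], y: List[float]) -> None:
    """Body of one simulated GPU thread: update exactly one element of y."""
    if thread_id < len(x):  # guard: the grid may contain more threads than elements
        y[thread_id] = a * x[thread_id] + y[thread_id]

def launch(n_threads: int, a: float, x: List[float], y: List[float]) -> None:
    """Run the kernel once per thread index, sequentially (a GPU would run them in parallel)."""
    for tid in range(n_threads):
        daxpy_kernel(tid, a, x, y)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
launch(n_threads=8, a=2.0, x=x, y=y)  # more threads than elements, as when a grid is rounded up
print(y)  # [12.0, 24.0, 36.0, 48.0]
```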

Compute Unified Device Architecture (CUDA) is a parallel computing platform and application programming interface (API) model created by Nvidia in 2007. It can only be run on GPUs made by Nvidia.

The following table lists all of the Nvidia GPUs that are capable of more than one thousand billion double-precision floating point operations per second (1 teraflop, or 1000 GFLOPS), according to the list of Nvidia graphics processing units on Wikipedia. Prices are in US dollars from Amazon in September 2015.

| Name | RAM | Double-precision GFLOPS | Price (USD) |
|---|---|---|---|
| Nvidia GTX Titan | 6 GB | 1500 | $600 |
| Nvidia GTX Titan Black | 6 GB | 1707 | $700 |
| Nvidia GTX Titan Z | 12 GB | 2707 | $1593 |
| Nvidia Tesla K20 | 5 GB | 1173 | $1975 |
| Nvidia Tesla K20X | 6 GB | 1312 | $1975 |
| Nvidia Tesla K40 | 12 GB | 1430 | $3000 |
| Nvidia Quadro K6000 | 12 GB | 1732 | $3650 |
| Nvidia Tesla K80 | 24 GB | 2330 | $4246 |

CUDA can only be used on Nvidia GPUs, but it’s not the only GPGPU API available. If you’re looking for double-precision floating point performance on OpenCL or DirectCompute, you will find similar performance and value with a GPU from AMD.

| Name | RAM | Double-precision GFLOPS | Price (USD) |
|---|---|---|---|
| AMD Radeon HD 6990 | 4 GB | 1277 | ? |
| AMD Radeon HD 7990 | 6 GB | 1894 | $693 |
| AMD Radeon HD 8990 | 6 GB | 1946 | ? |
| AMD Radeon R9 295X2 | 8 GB | 1433 | $989 |
| AMD Radeon Sky 900 | 6 GB | 1478 | $1650 |
| AMD FirePro W8100 | 8 GB | 2109 | $995 |
| AMD FirePro W9100 | 16 GB | 2619 | $3100 |
| AMD FirePro S9150 | 16 GB | 2530 | $1999 |
| AMD FirePro S9170 | 32 GB | 2620 | ? |
| AMD FirePro S10000 | 6 GB | 1478 | $3290 |
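The value comparison comes down to simple arithmetic: divide each card's double-precision GFLOPS by its price. Here is a minimal sketch using the figures from the two tables above. Cards listed with an unknown price ("?") are skipped, and the helper name `gflops_per_dollar` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# (double-precision GFLOPS, price in USD or None when the table shows "?")
GPUS: Dict[str, Tuple[float, Optional[float]]] = {
    "Nvidia GTX Titan": (1500, 600),
    "Nvidia GTX Titan Black": (1707, 700),
    "Nvidia GTX Titan Z": (2707, 1593),
    "Nvidia Tesla K20": (1173, 1975),
    "Nvidia Tesla K20X": (1312, 1975),
    "Nvidia Tesla K40": (1430, 3000),
    "Nvidia Quadro K6000": (1732, 3650),
    "Nvidia Tesla K80": (2330, 4246),
    "AMD Radeon HD 6990": (1277, None),
    "AMD Radeon HD 7990": (1894, 693),
    "AMD Radeon HD 8990": (1946, None),
    "AMD Radeon R9 295X2": (1433, 989),
    "AMD Radeon Sky 900": (1478, 1650),
    "AMD FirePro W8100": (2109, 995),
    "AMD FirePro W9100": (2619, 3100),
    "AMD FirePro S9150": (2530, 1999),
    "AMD FirePro S9170": (2620, None),
    "AMD FirePro S10000": (1478, 3290),
}

def gflops_per_dollar(gpus: Dict[str, Tuple[float, Optional[float]]]) -> List[Tuple[str, float]]:
    """Return (name, GFLOPS per dollar) pairs, best value first, skipping unknown prices."""
    ratios = [(name, gflops / price) for name, (gflops, price) in gpus.items() if price is not None]
    return sorted(ratios, key=lambda pair: pair[1], reverse=True)

for name, ratio in gflops_per_dollar(GPUS):
    print(f"{name:<24} {ratio:.2f} GFLOPS/$")
```

At these September 2015 prices, the GTX Titan leads the Nvidia cards at 2.5 GFLOPS per dollar. The Radeon HD 7990 comes out slightly ahead overall at about 2.7.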

The Hardware

Motherboard: MSI 970A SLI Krait Edition

CPU: AMD FX-8350

RAM: Corsair Vengeance Pro 4x8GB

Disk: Samsung 850 EVO 500GB

Power: Cooler Master V1000

Case: Cooler Master Storm Scout 2

Operating System: Microsoft Windows 10

All tests will be conducted on this standard consumer-grade PC with an 8-core CPU at 4.0GHz, 32GB RAM, a solid-state drive, and enough power and space to run two high-end GPUs.

The GPUs

Nvidia GeForce GTX Titan

Year: 2013

14 Cores, 1.2GHz, 6GB RAM, 2688 Stream Processors

This is currently the best-value processor for double-precision floating point operations in CUDA.

The Nvidia Titan, Titan Black, and Titan Z can be configured to run double-precision floating point operations at a higher rate than normal, with a trade-off of slower single-precision performance and game graphics. In some of these benchmarks I will be testing both modes, identified as Titan (normal performance) and Titan DP (boosted double-precision).

Note: the newer Titan X does not have double-precision mode!
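Theoretical peak figures like those in the tables are usually estimated as stream processors × clock × 2 (a fused multiply-add counts as two floating point operations) × the card's double-to-single-precision rate. This rate is what the Titan's double-precision mode changes. Here is a minimal sketch, assuming the GTX Titan's published 837 MHz base clock and a double-precision rate of 1/3 with the mode enabled versus 1/24 without it. These are assumptions: the clock is not the figure listed above, and neither rate comes from this post.

```python
def peak_gflops(stream_processors: int, clock_mhz: float, fp64_ratio: float = 1.0) -> float:
    """Theoretical peak GFLOPS, assuming every stream processor completes one
    fused multiply-add (2 FLOPs) per clock, scaled by the FP64:FP32 rate."""
    return stream_processors * clock_mhz * 2 * fp64_ratio / 1000.0

# Assumed GTX Titan figures: 2688 stream processors at an 837 MHz base clock
normal = peak_gflops(2688, 837, fp64_ratio=1 / 24)  # double-precision mode off
dp_mode = peak_gflops(2688, 837, fp64_ratio=1 / 3)  # double-precision mode on
print(f"FP64 normal: {normal:.0f} GFLOPS, FP64 DP mode: {dp_mode:.0f} GFLOPS")
# FP64 normal: 187 GFLOPS, FP64 DP mode: 1500 GFLOPS
```

The DP-mode result lines up with the 1500 GFLOPS listed for the GTX Titan in the table, and the eightfold gap shows why the mode matters for double-precision work.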

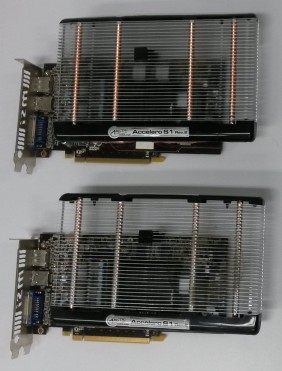

Nvidia GeForce GTX 680

Year: 2012

8 Cores, 1.2GHz, 4GB RAM, 1536 Stream Processors

This is my current graphics card at home. It plays all the games.

While it is a fine GPU on its own, the GTX 680 can also be used as a co-processor to assist a faster GPU (such as the Titan) during heavy computing workloads. In GPGPU computing tasks both cards will share the load. In games and graphics applications the primary card (Titan) will do graphics while the secondary card (680) handles computing tasks such as Nvidia PhysX (physics simulations on CUDA) or AMD TressFX (realistic hair simulation). This configuration will be identified as Titan+680.
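One simple way for two unequal cards to share a GPGPU workload is a static split: each card gets a share of the work proportional to its throughput, so both finish at roughly the same time. This is a minimal sketch of that general technique, not necessarily how any given application divides work. The function name and the 3:1 throughput ratio in the example are hypothetical, not measured figures.

```python
from typing import Dict

def split_work(n_items: int, throughput: Dict[str, float]) -> Dict[str, int]:
    """Divide n_items across devices in proportion to their relative throughput."""
    total = sum(throughput.values())
    shares = {name: int(n_items * t / total) for name, t in throughput.items()}
    # Items lost to rounding down are handed to the fastest device
    fastest = max(throughput, key=throughput.get)
    shares[fastest] += n_items - sum(shares.values())
    return shares

# Hypothetical relative throughputs, for illustration only
print(split_work(1000, {"Titan": 3.0, "GTX 680": 1.0}))  # {'Titan': 750, 'GTX 680': 250}
```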

AMD Radeon HD 5770

Year: 2009

10 Cores, 850MHz, 1GB RAM, 800 Stream Processors

I have two of these cards. I will be testing one individually, and then both working together in CrossFireX mode identified as 5770+5770.

With only 1GB RAM, its memory space is too small to run some tests. It is still limited to 1GB RAM when both cards are combined: the two cards share the computing load, but each card holds its own copy of the data, so their memory does not add up.
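In CrossFireX's common alternate frame rendering mode, the cards take turns drawing successive frames, and each card keeps a full copy of the data it needs. Here is a minimal sketch of those two rules, assuming alternate frame rendering; the function names are hypothetical.

```python
from typing import List

def assign_frames(n_frames: int, n_gpus: int) -> List[int]:
    """Alternate frame rendering: frame i is drawn by GPU i mod n_gpus."""
    return [frame % n_gpus for frame in range(n_frames)]

def usable_memory_gb(per_card_gb: List[float]) -> float:
    """Every card mirrors the same data, so the smallest card sets the limit."""
    return min(per_card_gb)

print(assign_frames(6, 2))           # [0, 1, 0, 1, 0, 1]
print(usable_memory_gb([1.0, 1.0]))  # 1.0, not 2.0
```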

The Radeon 5770 does not have native support for double-precision floating point operations, although it is able to emulate double-floats in some applications.
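A common way to emulate higher precision on hardware that only has single-precision units is "double-single" (float-float) arithmetic. A value is stored as the unevaluated sum of two 32-bit floats, and error-free transformations such as Knuth's TwoSum carry each rounding error into the second float. This sketch shows that general technique; it is not necessarily what any particular application does on the 5770. Python floats are 64-bit, so the helper `f32` (a name chosen here) rounds every intermediate result to 32 bits to mimic single-precision hardware.

```python
import struct
from typing import Tuple

DS = Tuple[float, float]  # (hi, lo) pair of float32 values; the number is hi + lo

def f32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

def two_sum(a: float, b: float) -> DS:
    """Knuth's TwoSum in float32: s = fl(a + b) and a + b == s + err exactly."""
    s = f32(a + b)
    bb = f32(s - a)
    err = f32(f32(a - f32(s - bb)) + f32(b - bb))
    return s, err

def quick_two_sum(a: float, b: float) -> DS:
    """Like two_sum, but assumes |a| >= |b|; used to renormalise a (hi, lo) pair."""
    s = f32(a + b)
    return s, f32(b - f32(s - a))

def to_ds(x: float) -> DS:
    """Split a 64-bit float into a float32 head and a float32 tail."""
    hi = f32(x)
    return hi, f32(x - hi)

def ds_add(x: DS, y: DS) -> DS:
    """Add two double-single numbers using only (emulated) float32 operations."""
    s, e = two_sum(x[0], y[0])
    e = f32(e + f32(x[1] + y[1]))
    return quick_two_sum(s, e)

exact = 1 / 3 + 1 / 7
single = f32(f32(1 / 3) + f32(1 / 7))        # plain float32 addition
hi, lo = ds_add(to_ds(1 / 3), to_ds(1 / 7))  # emulated extra precision
print(abs(single - exact), abs((hi + lo) - exact))
```

The plain float32 sum is off by a few parts in 10^8. The double-single result is several orders of magnitude closer, at the cost of many more instructions per addition.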

AMD FX-8350 CPU

Year: 2012

8 Cores, 4.0GHz, 8MB Cache, 64 Stream Processors

This is not a GPU; it’s a CPU. It can’t run CUDA, but it can run OpenCL and native code, so it can compete with the GPUs in some tests.

The Tests

AIDA64

AIDA64 Extreme is a hardware information and benchmark suite for Windows.

If you’re reading this to find out which of these GPUs is the best at double-precision floating point calculations, you can stop reading now. We have a winner.

The FX-8350 beat the GPUs in the AES test because it has dedicated encryption acceleration features (the AES-NI instruction set).

SiSoft Sandra 2015

Sandra 2015 is a benchmark suite for Windows.

Compute API Comparison

This test compares four different GPU computing APIs: CUDA, OpenCL, OpenGL, and DirectCompute.

Thankfully CUDA achieved the highest score for double-floats on the Titan, because that’s exactly what I bought it for! In the remaining Sandra tests I will use CUDA on the Nvidia cards and OpenCL on the others.

GPGPU Processing

A surprising show of strength from the 5770+5770, beating the 680 at single- and double-floats, and matching the Titan at single-floats. Somehow the Titan+680 achieved a higher single-float score than their individual scores added together. There’s something weird about this test.
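A quick sanity check for multi-GPU results is to divide the combined score by the sum of the individual scores. With perfect scaling the ratio is 1.0. A ratio above 1.0 (superlinear scaling) is unusual and worth a second look, because two cards working together rarely do more than the sum of what each does alone. Here is a minimal sketch; the scores in the example are made-up numbers, not results from this test.

```python
def scaling_efficiency(combined: float, *individual: float) -> float:
    """Combined score divided by the sum of the cards' individual scores (1.0 = perfect scaling)."""
    return combined / sum(individual)

# Made-up scores for illustration only
eff = scaling_efficiency(130.0, 100.0, 40.0)
print(f"{eff:.2f}")  # 0.93
```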

GPGPU Cryptography

Settings: AES256+SHA2-512

The FX-8350 was unable to use its encryption acceleration features here, possibly because the test is run in OpenCL instead of native code.

GPGPU Financial Analysis Single-Precision

GPGPU Financial Analysis Double-Precision

GPGPU Scientific Analysis Single-Precision

GPGPU Scientific Analysis Double-Precision

GPGPU Bandwidth

The maximum interface transfer bandwidth for the GPUs is limited by the PCI Express 2.0 interface on the motherboard.

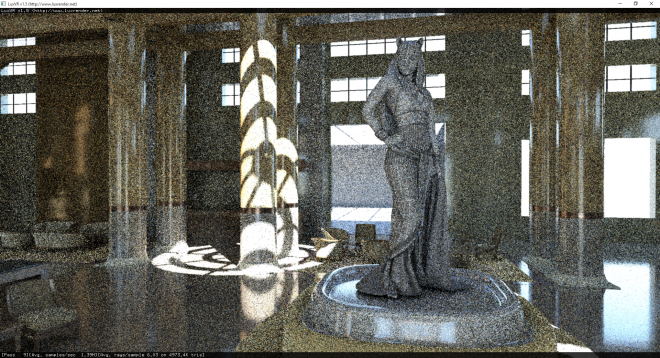

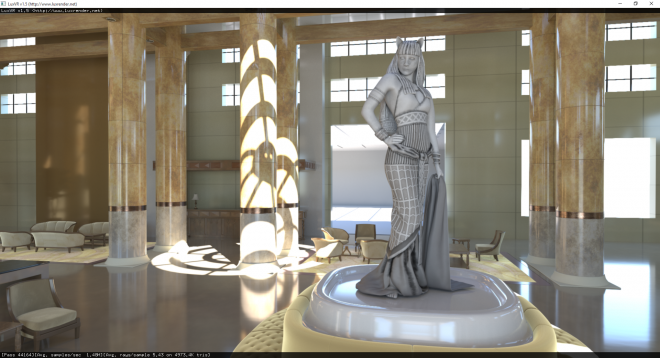
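The PCI Express 2.0 ceiling can be worked out from the specification. Each lane signals at 5 GT/s and uses 8b/10b encoding, so only 8 of every 10 bits carry data. That gives 500 MB/s per lane in each direction, or 8 GB/s for a x16 slot. Here is a minimal sketch of that arithmetic, assuming each card sits in a full x16 slot (the post does not state the slot widths). Protocol overhead means measured transfers come in below this raw figure.

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int, payload_bits: int, total_bits: int) -> float:
    """Raw one-direction PCIe bandwidth in GB/s after line-encoding overhead."""
    bits_per_s = gt_per_s * 1e9 * lanes * payload_bits / total_bits
    return bits_per_s / 8 / 1e9  # bits -> bytes -> decimal GB

gen2_x16 = pcie_bandwidth_gb_s(5.0, 16, 8, 10)  # PCIe 2.0: 5 GT/s per lane, 8b/10b encoding
print(f"PCIe 2.0 x16: {gen2_x16:.1f} GB/s per direction")  # 8.0 GB/s
print(f"Time to move 6 GB at that rate: {6 / gen2_x16:.2f} s")  # 0.75 s
```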

LuxMark 3.1

LuxMark is an OpenCL benchmark tool for Windows, Linux, and Mac. It renders images by simulating the flow of light according to physical equations.

Note: Radeon 5770 could not run this test because it requires more than 1GB RAM.

Graphics Benchmarks

3DMark 11 Basic Edition

3DMark 11 is a video card benchmark tool for Windows. It makes extensive use of DirectX 11 features including tessellation, compute shaders and multi-threading.

3DMark Advanced Edition

3DMark is a video card benchmark tool for Windows, Android, and iOS. It is the successor to 3DMark 11.

3DMark API Overhead feature test 1.2

Note: Radeon 5770 cannot run this test.

Unigine Heaven 4.0 Basic Edition

Heaven is a GPU stress-testing and benchmark tool for Windows, Linux and Mac. It makes comprehensive use of tessellation.

Unigine Valley 1.0 Basic Edition

Valley is a GPU stress-testing and benchmark tool for Windows, Linux and Mac.

Game Benchmarks

Doom 3

Year: 2004

Engine: id Tech 4

Settings: 1280×1024 Ultra, timedemo demo1

Half-Life 2: Lost Coast

Year: 2005

Engine: Source, Havok

Settings: 1920×1080 Maximum detail

Doom 3 and Half-Life 2 are unable to benefit from the 5770+5770 because their engines were made before it was possible to combine multiple GPUs.

Batman: Arkham Origins

Year: 2013

Engine: Unreal Engine 3, PhysX

Settings: 1920×1080 Maximum detail

The Titan+680 achieved clear gains here; the Titan was able to work on graphics exclusively while the 680 handled PhysX processing.

Tomb Raider

Year: 2013

Engine: Foundation, TressFX

Settings: 2560×1080 ‘Ultimate’ preset.

Note: Game is limited to 60 frames per second.

The Titan hit the maximum frame rate on its own. I’m not sure why the Titan+680 was slightly slower.
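A frame-rate cap also means a capped result cannot separate two configurations that are both fast enough. At 60 frames per second each frame has a budget of 1000/60 ≈ 16.7 ms, and a card that finishes sooner simply waits. Here is a minimal sketch, assuming the cap works by holding every frame to at least that budget; the frame times in the example are hypothetical.

```python
from typing import List

def capped_fps(render_ms: List[float], cap_fps: float = 60.0) -> float:
    """Average FPS when every frame is shown for at least 1000/cap_fps milliseconds."""
    budget_ms = 1000.0 / cap_fps
    shown_ms = [max(t, budget_ms) for t in render_ms]
    return 1000.0 * len(shown_ms) / sum(shown_ms)

fast_card = [9.0, 10.0, 11.0]   # hypothetical render times, all under budget
faster_card = [6.0, 7.0, 8.0]   # hypothetical, even quicker
print(capped_fps(fast_card), capped_fps(faster_card))  # both approximately 60
```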

Bioshock Infinite

Year: 2013

Engine: Unreal Engine 3

Settings: 1920×1080 ‘UltraDX11 DDOF’ preset.

EVE Online

Year: 2003-2015

Engine: Trinity

Note: EVE Probe is the benchmark tool for EVE Online.

Batman: Arkham Knight

Year: 2015

Engine: Unreal Engine 3, Nvidia GameWorks

Settings: 1920×1080 Maximum detail.

Note: Radeon 5770 could not run this test because it requires more than 1GB RAM.

Conclusion

Titan’s double-precision mode performed exactly as expected, easily dominating the double-precision tests. The older Radeon 5770 surprised with strong results in a few tests, highlighting the architectural differences in GPU designs from Nvidia and AMD. I would be very interested to see how a newer AMD GPU compares in those tests.

The FX-8350 CPU took last place in nearly every test because it has far fewer cores and stream processors than the GPUs, despite its higher speed per core. It did win the AIDA64 AES test thanks to its encryption acceleration features.

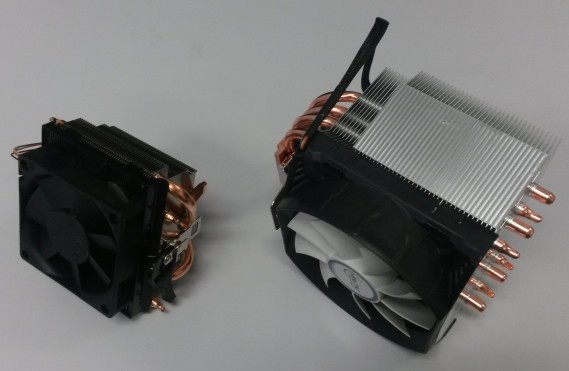

Upgrading the CPU cooler

To increase the longevity of the FX-8350 CPU, I replaced the standard cooler with a bigger one: the Arctic Cooling Freezer 13. As you can see in the final picture below, the cooler overlaps the RAM slots. It required some cutting (the cooler, not the RAM) to make it fit.