RX Vega 56 launched in August 2017 with a blower-style stock cooler that cannot adequately dissipate the heat this GPU produces at its full performance level. The default settings deliberately limit power consumption and fan speed to keep the card running comfortably within its thermal limits. Can it be improved with a different GPU cooler?

The Radeon driver software includes a tool called WattMan for adjusting the GPU and HBM clock speeds and voltages, the fan speed, and the total GPU power limit. Setting the fan speed and power limit as high as they will go yields an immediate improvement, albeit with uncomfortably loud fan noise. Maximum power consumption is limited to 210 W by the Vega 56 BIOS firmware, and the easiest way to go higher is to replace it with BIOS firmware from the RX Vega 64 (up to 295 W) or the RX Vega 64 Liquid Cooling edition (up to 345 W).

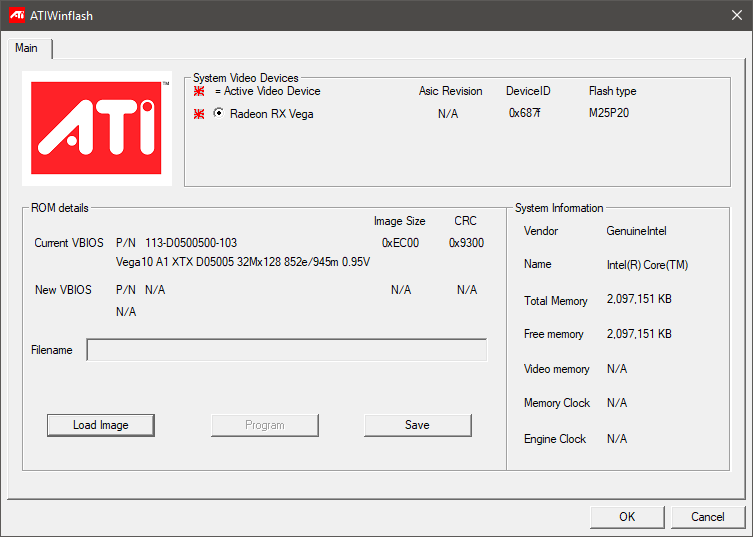

This GPU has a small switch on the top of the card for selecting between two different BIOS. One of them is write-protected; the other can be overwritten (flashed) with any RX Vega BIOS using ATIWinflash. TechPowerUp hosts a collection of user-submitted BIOS files for most GPUs.

To get the most out of a Vega 56 with the original cooler, I recommend using the Vega 64 BIOS and sliding the power limit all the way to +50% in WattMan, which gives the card more breathing space and higher default clock speeds. The GPU adjusts its speed automatically to keep the temperature below 85 °C, so raise the fan speed as high as you can tolerate.

For maximum performance, the Vega 64 water-cooled edition BIOS has the highest power limit, but there are two caveats: its default GPU clock speed of 1750 MHz is too high for most Vega 56 GPUs (although you might get lucky), and its temperature limit is locked at 70 °C.

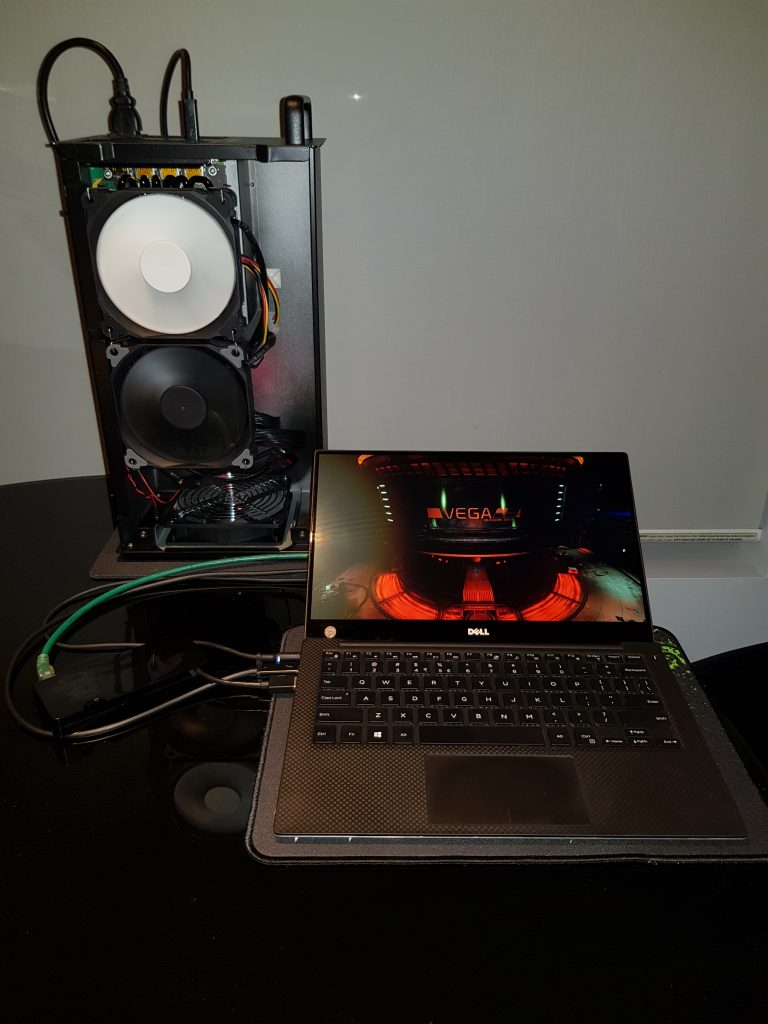

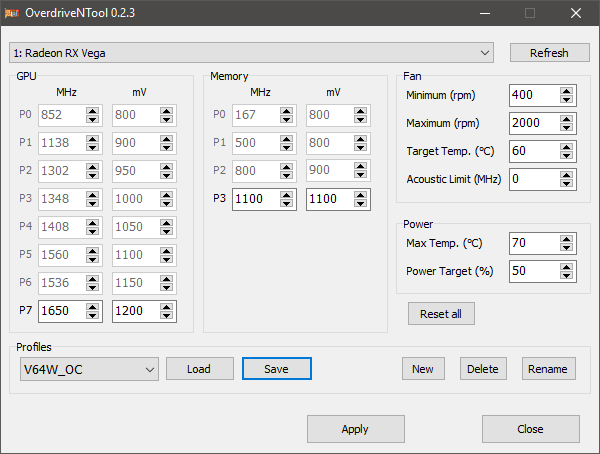

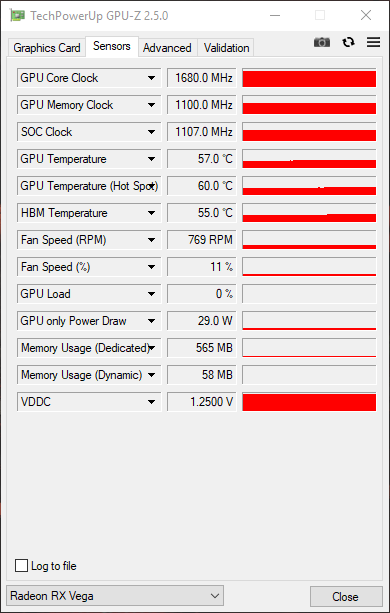

I have installed my Vega 56 in an AKiTiO Node Thunderbolt 3 external graphics enclosure connected to a Dell XPS 13 9350. The Node has been upgraded with a 600 W power supply. I'm using the Vega 64 water-cooled edition BIOS, underclocked with OverdriveNTool (because WattMan is annoying), and I'm monitoring and logging GPU status with GPU-Z.
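GPU-Z can write its sensor readings to a comma-separated text log. As a rough sketch of how such a log might be summarised, the Python below reports the average and peak of one sensor column. The column name `GPU Clock [MHz]`, the file name `GPU-Z Sensor Log.txt`, and the layout (one header row, comma-separated cells padded with spaces) are assumptions; check them against the header line of your own log, since they may differ between GPU-Z versions. The helper name `summarize_column` is hypothetical.

```python
import csv
import io
from statistics import mean


def summarize_column(log_text: str, column: str) -> tuple[float, float]:
    """Return (average, peak) of a numeric column in a GPU-Z-style CSV log.

    Assumption: the first row is a header and cells may be padded with
    spaces. The column name is whatever your log's header uses; it is not
    a fixed GPU-Z API.
    """
    reader = csv.reader(io.StringIO(log_text))
    header = [name.strip() for name in next(reader)]
    index = header.index(column)  # raises ValueError if the column is missing
    values = []
    for row in reader:
        if index >= len(row):
            continue  # skip blank or truncated rows
        try:
            values.append(float(row[index].strip()))
        except ValueError:
            continue  # skip non-numeric cells
    if not values:
        raise ValueError(f"no numeric samples in column {column!r}")
    return mean(values), max(values)
```

Usage might look like `summarize_column(open("GPU-Z Sensor Log.txt", encoding="utf-8", errors="replace").read(), "GPU Clock [MHz]")`. Parsing with the `csv` module rather than a plain `split(",")` keeps the sketch robust if a field ever contains a quoted comma.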

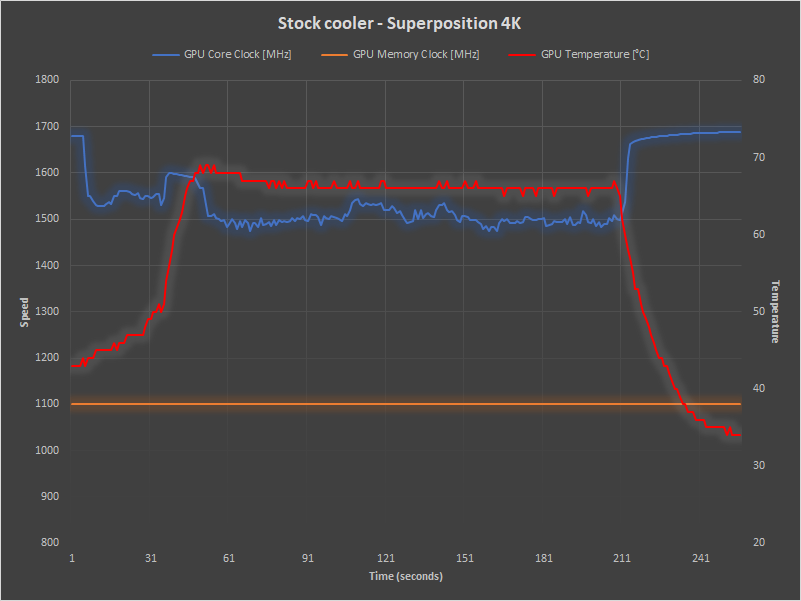

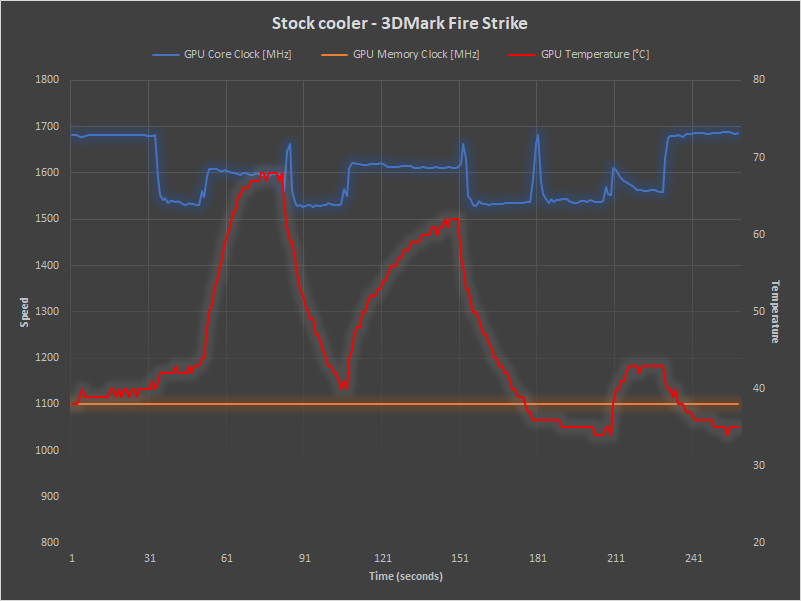

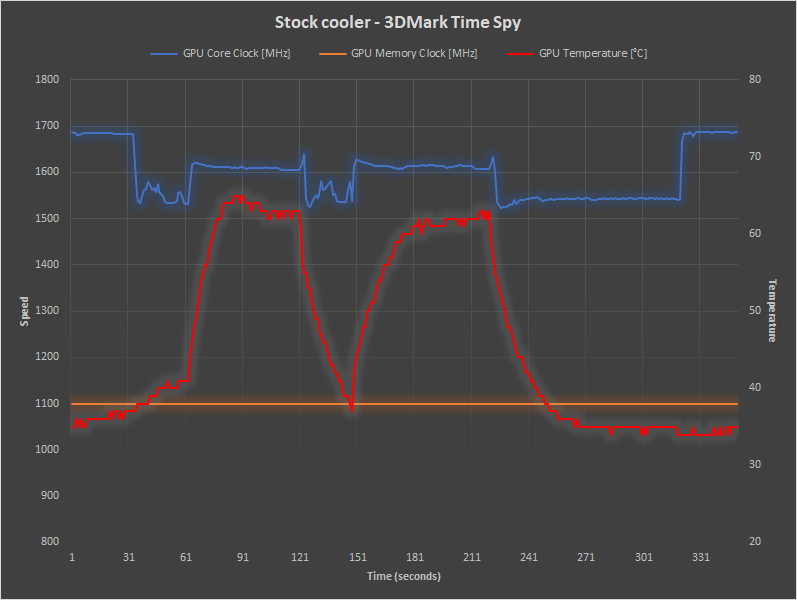

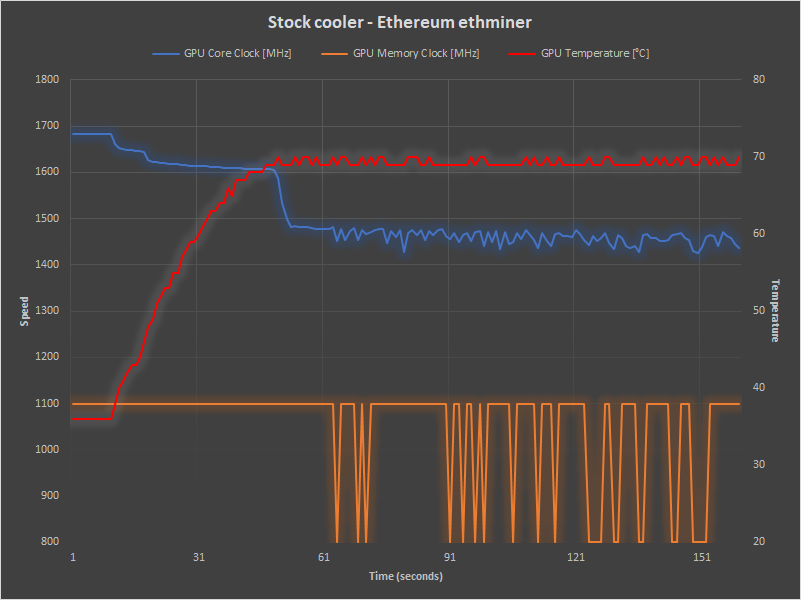

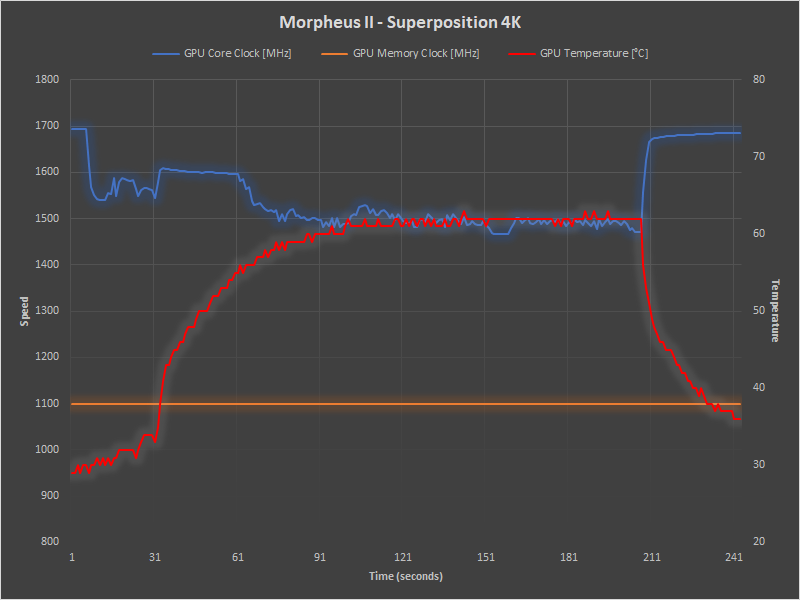

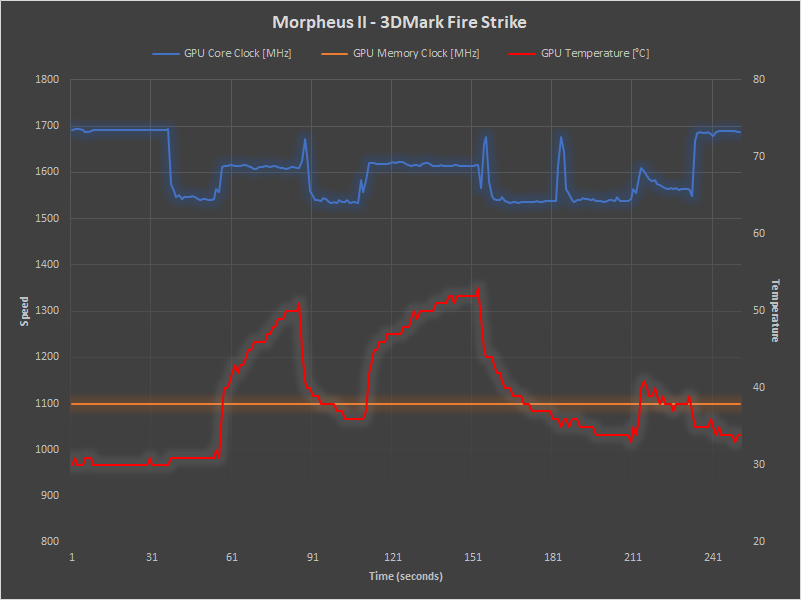

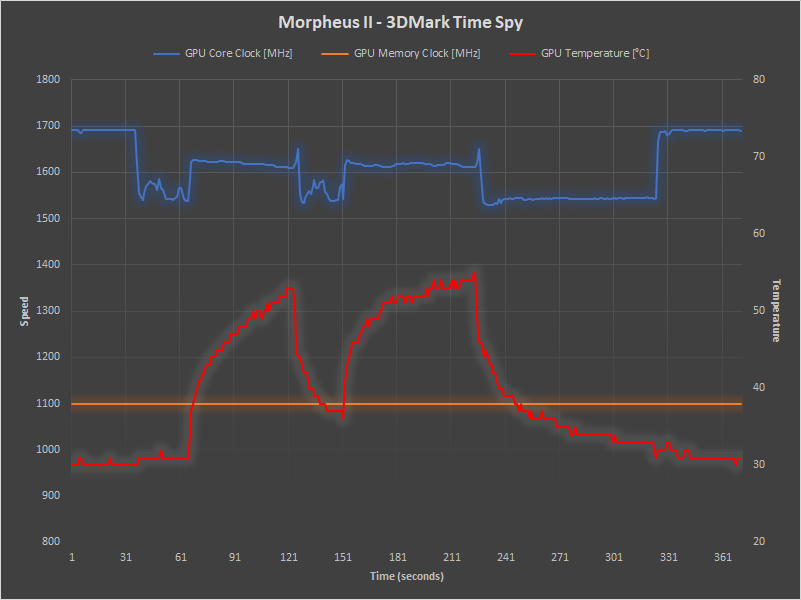

With the GPU clock set to 1650 MHz (the actual clock speed can vary above or below this depending on load, temperature and power draw), the idle temperature is 35 °C. To see what happens under load, I am using four stress-test applications: 3DMark Fire Strike, 3DMark Time Spy, Unigine Superposition, and the Ethereum miner ethminer.

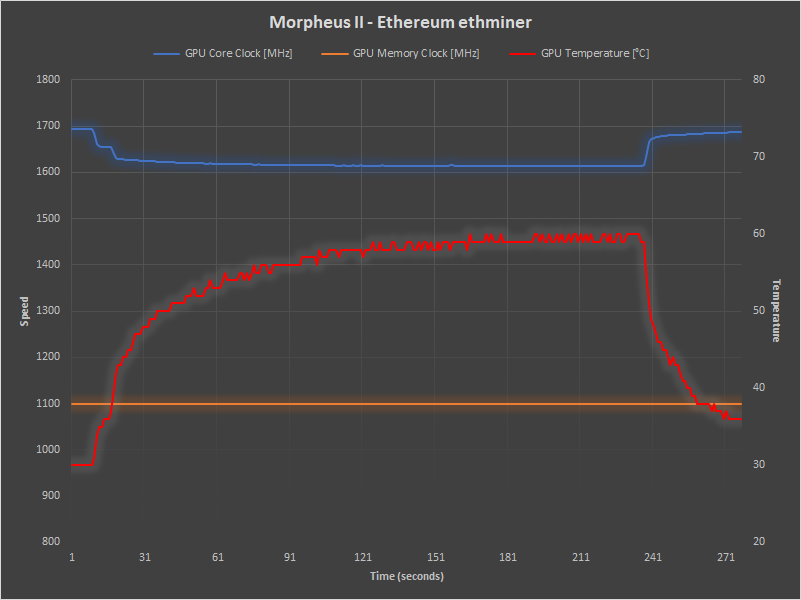

The results show the GPU core clock running above 1600 MHz for a short time at the start of each test before dropping to about 1500 MHz under sustained load. The HBM (GPU memory) clock remained stable throughout the graphics tests and only throttled during Ethereum mining, which stresses the GPU in a different way.
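To put a number on the drop from the initial burst to the sustained clock, a trace of clock samples (for example, one column extracted from a GPU-Z log) can be split into a leading window and the remainder. This is a minimal sketch: the function name `boost_vs_sustained` and the idea of a fixed warm-up window are illustrative assumptions, and a sensible window length depends on your logging interval and on how long the boost lasts in your own traces.

```python
from statistics import mean


def boost_vs_sustained(samples: list[float], warmup: int) -> tuple[float, float, float]:
    """Compare the first `warmup` clock samples with the rest of the trace.

    `samples` are clock readings in MHz taken at a fixed interval.
    Returns (initial mean, sustained mean, percentage drop from initial
    to sustained). Assumes a single boost phase at the start of the run.
    """
    if not 0 < warmup < len(samples):
        raise ValueError("warmup must leave samples on both sides of the split")
    initial = mean(samples[:warmup])
    sustained = mean(samples[warmup:])
    drop_pct = (initial - sustained) / initial * 100
    return initial, sustained, drop_pct
```

Averaging each window, rather than comparing single readings, smooths out the moment-to-moment clock variation visible in the graphs.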

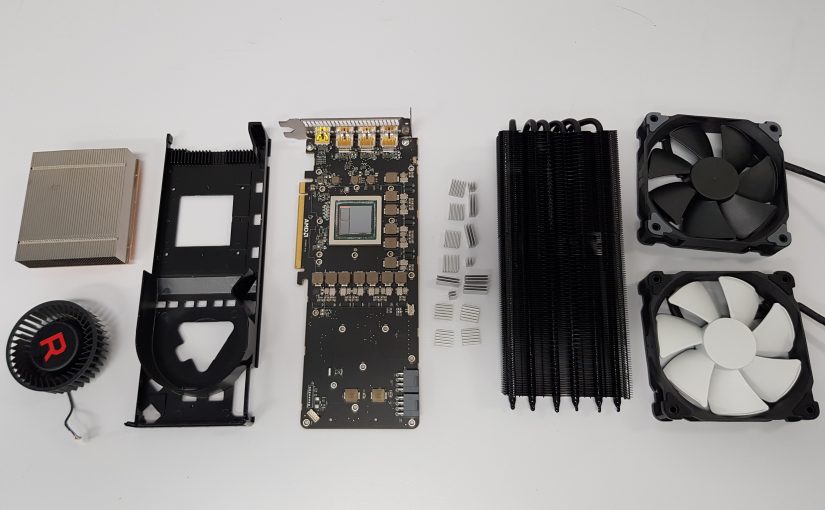

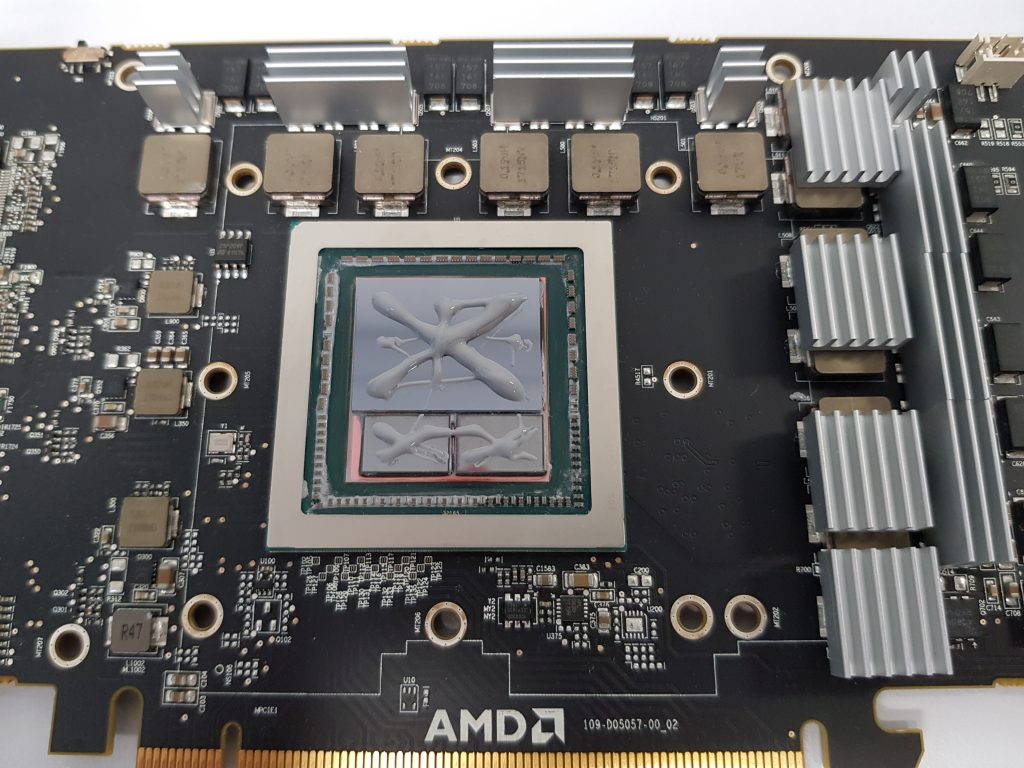

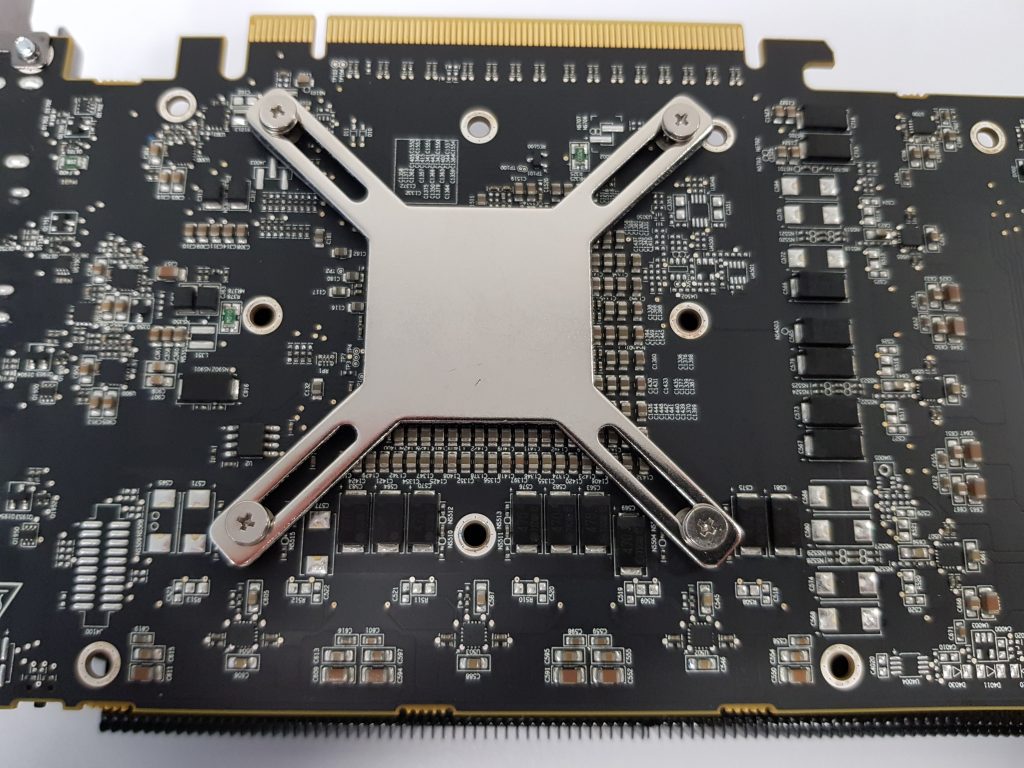

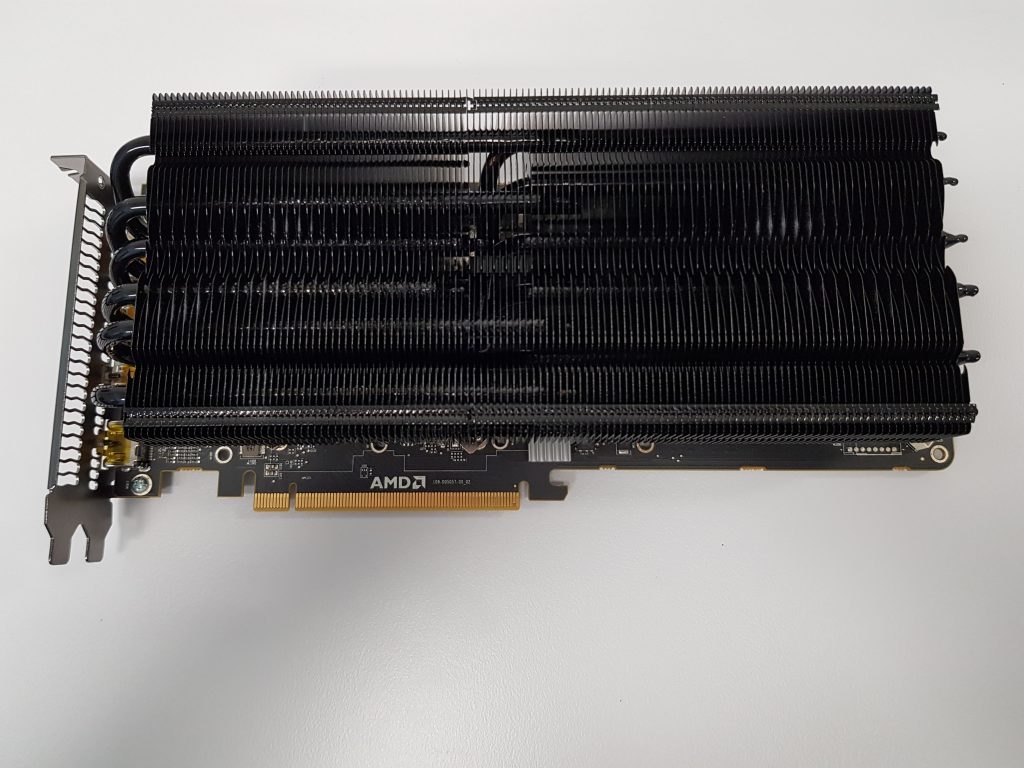

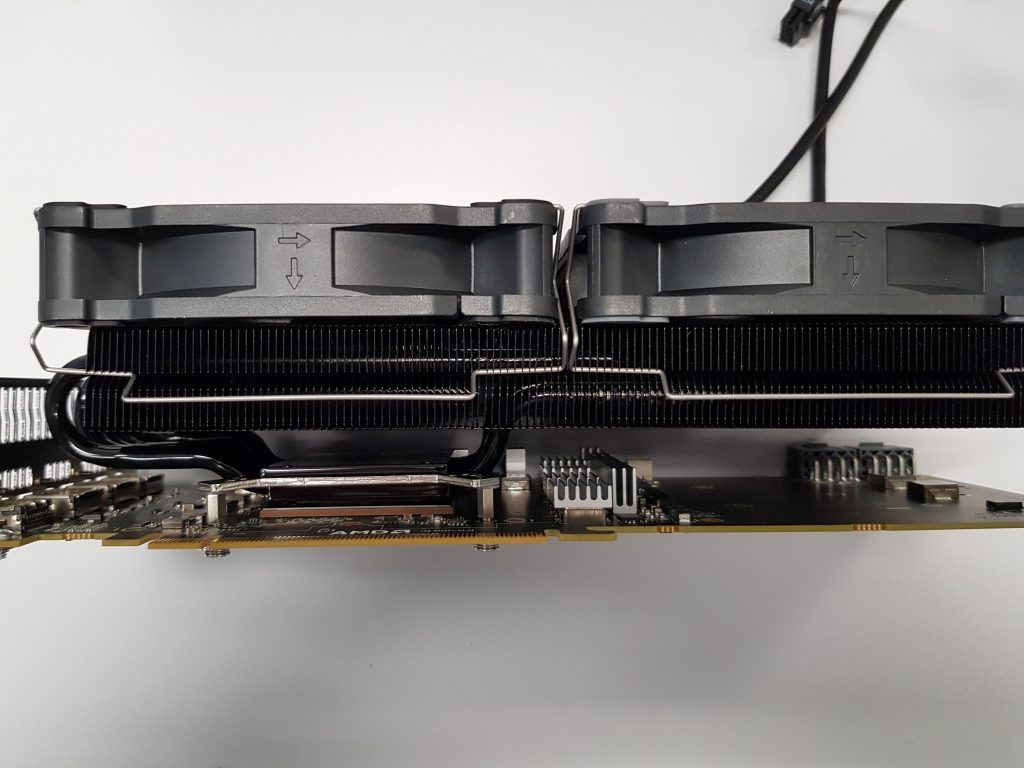

Now let’s see what happens when the stock cooler is replaced with a larger air cooler: the Raijintek Morpheus II.

Running the stress tests again with the larger Morpheus II cooler produced surprising results: almost no difference in clock speeds in the graphics tests, despite temperatures up to 15 °C lower than before. Scores in these benchmarks improved by less than 1%. Only the Ethereum mining test seemed to benefit: GPU and HBM clocks now remained high and stable, resulting in a 29% higher mining hash rate. That suggests other OpenCL compute workloads may benefit as well.
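The percentages above are relative changes against the stock-cooler run: a 29% gain means the Morpheus II hash rate is 1.29 times the stock-cooler hash rate. A minimal sketch of that arithmetic follows; the helper name `percent_change` is hypothetical, and no measured values from these tests are reproduced in it.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent.

    For higher-is-better metrics (hash rate, benchmark score) a positive
    result is an improvement; for temperatures a negative result is.
    """
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100
```

Note that temperature differences like "15 °C lower" are quoted as absolute differences, not percentages, because a percentage of a Celsius reading depends on where zero happens to sit on the scale.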

Why didn't performance improve in the graphics tests? The most likely explanation is a bottleneck elsewhere: the laptop CPU, system RAM, or the Thunderbolt 3 interface. It could also be that the graphics tests don't strain the HBM modules as much as mining does, so the total heat output never actually required better cooling. Regardless, this modification was definitely worthwhile for reducing fan noise: the two large fans on the Morpheus II are barely audible even at full speed.